Laboratory for Parallel Numerical Algorithms

Research Topics

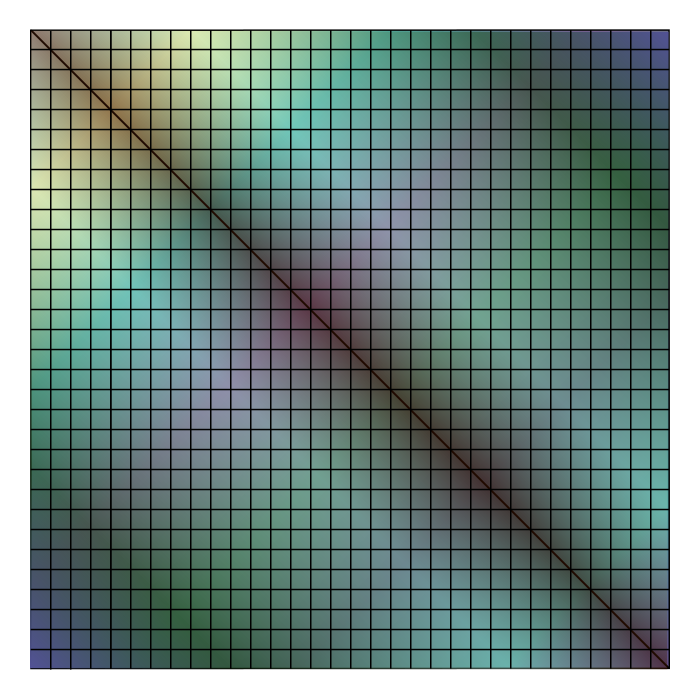

Tensor Algebra: Multidimensional data (ubiquitous in scientific computing and machine learning) can be effectively treated via tensor abstractions.

Dense and sparse tensor algebra, tensor decompositions, and tensor networks pose challenges in design of efficiency, software abstractions, and numerical methods.

Matrix Computations: Numerical linear algebra underlies most computational approaches in the data sciences.

Fast matrix algorithms provide solutions for nonlinear optimization, low-rank approximation, and eigenvalue problems.

Quantum Systems: Tensor representations provide the most natural way to computationally model entanglement (correlation between electrons).

We investigate numerical parallel algorithms for tensor computations arising in quantum chemistry (e.g. high-accuracy electronic structure calculations) and quantum computation (e.g. quantum circuit simulation).

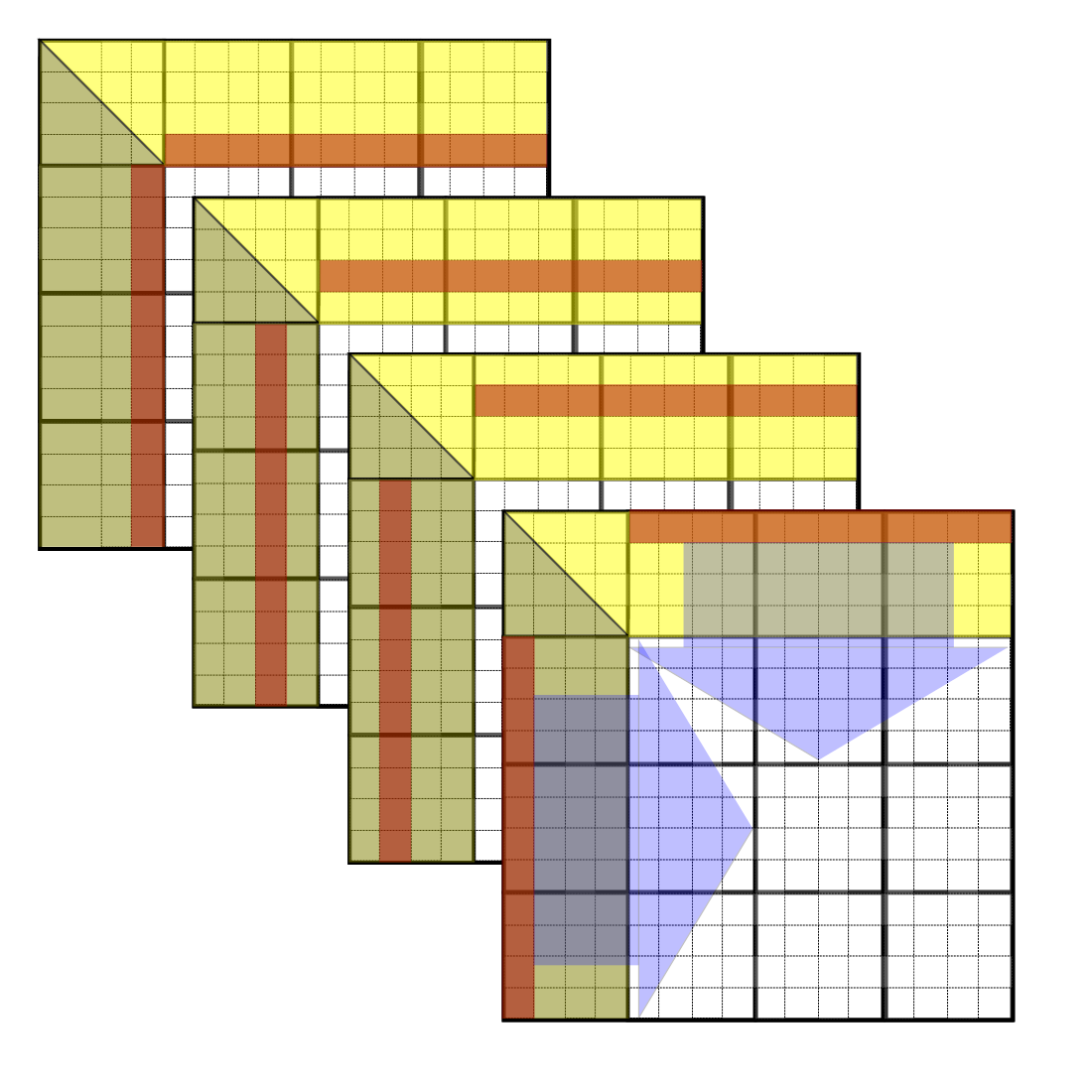

Communication-Avoiding Algorithms: Performance and scalability of algorithms and libraries is constrained by data movement in the memory hierarchy and network.

We aim to design parallel algorithms that minimize the amount of communication and number of messages.

Our group designs such algorithms for problems from a variety of domains, including graph problems, parallel sorting, bioinformatics, and numerical tensor computations.

High Performance Numerical Libraries: Parallel numerical libraries are the glue between fast algorithms and real-world applications.

We pursue application-driven research on algorithms by way of developing general and scalable library routines.

News

(March 2024) We have new funding for research on tensor approximations in solvers for nonlinear optimization problems and high-dimensional PDEs. Please contact Edgar if interested in a postdoctoral researcher position or in a research appointment (for current graduate/undergraduate students or prospective visitors).

Software

a suite of parallel algorithms for matrix factorization and eigendecomposition

Sponsors

Openings

We are always looking for new collaborators and participants. If you would like to pursue a position in our lab as an undergraduate researcher, academic visitor, or postdoctoral researcher, or are currently a MS/PhD student at UIUC interested in working with us, please contact Edgar Solomonik (solomon2@illinois.edu).

Members

We are part of the scientific computing group at the University of Illinois at Urbana-Champaign.Faculty

Associate Professor

Postdoctoral Researchers

Graduate Students

Undergraduate Students

Chandani Grover (CS)Sreevardhan Atyam (CS and Physics)

Aniket Deshpande (Physics)

Anitej Chanda (CS)

Bartu Koksal (CS)

Christopher Suarez (CS and Math)

Prior Participants

Postdoctoral Researchers

Raghavendra Kanakagiri (2021-2023)PhD Theses

Edward Hutter (2024): Accelerated performance modeling and tuning of parallel programsLinjian Ma (2023): Towards efficient algorithms and systems for tensor decompositions and tensor networks

Samah Karim (2022): Inexact interior point methods for constrained convex quadratic optimization problems

Master Theses

Wentao Yang (2022): Optimal round and sample-size complexity for partitioning in parallel sortingNavjot Singh (2020): Parallel Gauss-Newton method for CP decomposition

Tobias Wicky (2017): A communication-avoiding algorithm for solving linear systems of equations with selective inversion

Bachelor Theses

Yiqing Zhou (2020), Tianyi Hao (2020), Youcef Hadjarab (2020), Zecheng Zhang (2019), Pavle Simonovic (2018), Edward Hutter (2017)Former Graduate and Undergraduate Students, and External Visitors

Bangyan Shi (CS) (2023-2024) , Aniruddha Sen (visiting from U Mass, summer 2023), Mengyuan (Mina) Sun (CS) (2021-2023), Changsheng Chen (CS) (2021-2023), Tim Baer (CS) (2019-2022), Raul Platero (CS) (2017-2021), Dipro Ray (CS) (2019-2021), Annika Dugad (academic visitor) (2019-2020), Caleb Ju (CS) (2018-2020), Yifan Zhang (Math, Eng Phys, and Stats) (2019-2020), Zhaoyu Wu (CS) (2018-2019), Hung Woei Neoh (Math & CS) (2018-2019), Hongru Yang (CS & Stats) (2018-2019), Yunxin (David) Zhang (CS) (2018-2019), Xiaoxiao Wu (2018-2019), Siyuan Zhang (2018-2019), Naijing Zhang (2017-2019), Fan Huang (2018), Eric Song (2018), Ruiqian Yao (2018), Eduardo Yap (2018), Peter Tatkowski (2018), Qile Zhi (2017), Thomas Warther (2017).Video Lectures

Workshop on Sparse Tensor Computations Playlist of participant talks, UIUC/Chicago, October 2023

E-NLA lecture (via youtube) Efficient Inexact Solvers in Numerical Optimization Methods October 2021 (Edgar)

web-course Tensor Computations Spring 2022; CS 598-EVS

web-course Numerical analysis Spring 2021; CS 450

web-course Parallel numerical algorithms Fall 2017; CS 554

video June 2017; LPNA Lecture; Basics of tensors (Edgar)

video June 2017; LPNA Lecture; Basics of communication complexity (Edgar)

web-course Communication cost analysis of algorithms Fall 2016; CS 598-EVS

Publications

All publications lead by LPNA students and postdocs.| article | Linjian Ma, Matthew Fishman, Edwin Miles Stoudenmire, and Edgar Solomonik. Tensor rank and other multipartite entanglement measures of graph states Quantum 8, 2024. |

| article | Raghavendra Kanakagiri and Edgar Solomonik Minimum cost loop nests for contraction of a sparse tensor with a tensor network ACM Symposium on Parallelism in Algorithms and Architectures (SPAA), June 2024. |

| article | Caleb Ju, Yifan Zhang, and Edgar Solomonik Communication lower bounds for nested bilinear algorithms Foundations of Computational Mathematics (FoCM), 2023. |

| article | Caleb Ju, Serif Yesil, Mengyuan Sun, Chandra Chekuri, and Edgar Solomonik Efficient parallel implementation of the multiplicative weight update method for graph-based linear programs arXiv:2307.03307 [cs.DC], July 2023. |

| article | Navjot Singh and Edgar Solomonik Alternating Mahalanobis distance minimization for stable and accurate CP decomposition SIAM Journal of Scientific Computing (SISC), 2023. |

| article | Edward Hutter and Edgar Solomonik. High-dimensional performance modeling via tensor completion ACM/IEEE Supercomputing Conference, November 2023. |

| article | Wentao Yang, Vipul Harsh, and Edgar Solomonik Optimal round and sample-size complexity for partitioning in parallel sorting ACM Symposium on Parallelism in Algorithms and Architectures (SPAA), June 2023. |

| article | Linjian Ma and Edgar Solomonik Cost-efficient Gaussian tensor network embeddings for tensor-structured inputs Conference on Neural Information Processing Systems (NeurIPS), 2022. |

| article | Samah Karim and Edgar Solomonik Efficient preconditioners for interior point methods via a new Schur-complement-based strategy SIAM Journal on Matrix Analysis and Applications (SIMAX), 2022. |

| article | Navjot Singh, Zecheng Zhang, Xiaoxiao Wu, Naijing Zhang, Siyuan Zhang, and Edgar Solomonik Distributed-memory tensor completion for generalized loss functions in Python using new sparse tensor kernels Journal of Parallel and Distributed Computing (JPDC), 2022. |

| article | Linjian Ma and Edgar Solomonik Accelerating alternating least squares for tensor decomposition by pairwise perturbation Numerical Linear Algebra with Applications (NLAA), 2022. |

| article | Tim Baer, Raghavendra Kanakagiri, and Edgar Solomonik Parallel minimum spanning forest computation using sparse matrix kernels SIAM Conference of Parallel Processing for Scientific Computing (SIAM PP), 2022. |

| article | Caleb Ju, Yifan Zhang, and Edgar Solomonik Communication lower bounds for nested bilinear algorithms arXiv:2107.09834 [cs.DC], July 2021. |

| article | Linjian Ma and Edgar Solomonik Fast and accurate randomized algorithms for low-rank tensor decompositions Conference on Neural Information Processing Systems (NeurIPS), 2021. |

| article | Edward Hutter and Edgar Solomonik. Confidence-based approximation for performance prediction using execution path analysis IEEE International Parallel and Distributed Processing Symposium (IPDPS), arXiv:2103.01304 [cs.DC], May 2021. |

| article | Linjian Ma and Edgar Solomonik Efficient parallel CP decomposition with pairwise perturbation and multi-sweep dimension tree IEEE International Parallel and Distributed Processing Symposium (IPDPS), arXiv:2010.12056 [cs.DC], May 2021. |

| article | Yuchen Pang, Tianyi Hao, Annika Dugad, Yiqing Zhou, and Edgar Solomonik Efficient 2D tensor network simulation of quantum systems ACM/IEEE Supercomputing Conference (SC), Atlanta, GA, November 2020. |

| article | Caleb Ju and Edgar Solomonik Derivation and analysis of fast bilinear algorithms for convolution SIAM Review, 2020. |

| article | Linjian Ma, Jiayu Ye, and Edgar Solomonik AutoHOOT: Automatic High-Order Optimization for Tensors International Conference on Parallel Architectures and Compilation Techniques (PACT), October 2020. |

| article | Yifan Zhang and Edgar Solomonik On stability of tensor networks and canonical forms arXiv:2001.01191 [math.NA], January 2020. |

| article | Navjot Singh, Linjian Ma, Hongru Yang, and Edgar Solomonik Comparison of accuracy and scalability of Gauss-Newton and alternating least squares for CP decomposition SIAM Journal of Scientific Computing (SISC), October 2019. |

| article | Edward Hutter and Edgar Solomonik Communication-avoiding Cholesky-QR2 for rectangular matrices IEEE International Parallel and Distributed Processing Symposium (IPDPS), Rio de Jianero, Brazil, May 2019. |

| article | Tobias Wicky, Edgar Solomonik, and Torsten Hoefler Communication-avoiding parallel algorithms for solving triangular systems of linear equations IEEE International Parallel and Distributed Processing Symposium (IPDPS), Orlando, FL, June 2017, pp. 678-687. |